Managing Data Across Distributed Systems

Image Credits: RedHat

Let me start by saying this: building distributed systems is hard, so please don’t do it unless you absolutely need to. This might be obvious to most people, but I can’t begin to tell you how many times I’ve heard something like this: “We want to be on the right side of the tech curve, hence...”

One of the main issues with distributed systems done right is that there is no canonical source of truth anymore.

Having a shared database across multiple services is a very dangerous pattern. There are more than enough resources available online to support this claim so I’ll skip this part for now.

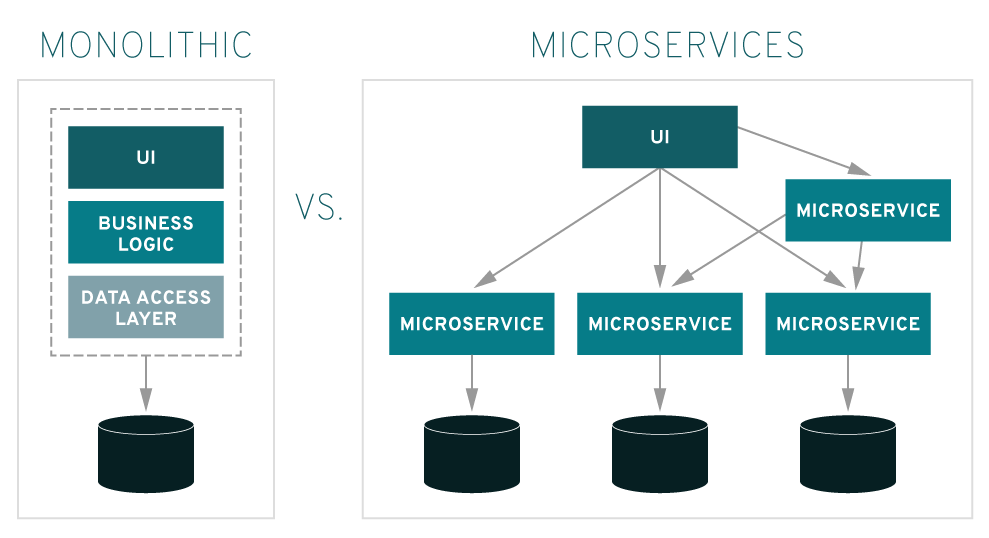

This article assumes that the distributed system in question is set up so that every service has its own persistent, isolated data store. This means that no service can access another’s data directly, because doing so would violate the service boundary. Please note that I refer to distributed systems as microservices here, since the two terms have become nearly synonymous in the modern world; SOA-based systems are distributed systems too.

For all my examples, I’ll use the following setup:

A distributed e-commerce system with the following services:

- Customer microservice

- Products microservice

- Order microservice

- Fulfillment microservice

- Analytics microservice

There are 3 main types of operations that we perform on our data. All of them are simple and straightforward in a monolith, and much more complicated when attempted over distributed data. Let’s discuss all three in detail:

Access Isolated Data:

- Every piece of data is owned by one service and one service only. No service other than the owner can read that data directly from the database instance.

- For all intents & purposes, the owner service (not the database) acts as the system of record for the data it owns.

- External access to isolated data is only possible through the published service interface provided by the service that owns the data.

- For example, when the fulfillment service receives a new request to ship out an order, it gets the relevant order data not from the database but through one of the following methods:

- Synchronous look-up: Get data via service interface provided by the order microservice (this method is synchronous & real-time )

- Read-Only Cache: Get data from available caching systems

- Global Cache: Such caches need to be updated as per events occurring in the order microservice but only the order service has write access to the cache. This can act as a global shared cache for all the services which need order data.

- Another possible method is for fulfillment service to maintain a local cache copy of order data for whatever fields it requires. In this approach, order service needs to provide async event hooks for data updates which the fulfillment service will have to subscribe to.
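The local-cache approach can be sketched roughly as follows. This is a minimal, in-memory illustration: `EventBus`, `OrderUpdated` and `FulfillmentOrderCache` are hypothetical names invented for this example, and a real system would use a message broker rather than an in-process list of subscribers.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class OrderUpdated:
    """Hypothetical event published by the order service after each change."""
    order_id: str
    status: str
    shipping_address: str
    total_cents: int  # not needed by fulfillment, so it is never cached there


class EventBus:
    """In-memory stand-in for a real message broker (an assumption)."""

    def __init__(self) -> None:
        self._subscribers: List[Callable[[OrderUpdated], None]] = []

    def subscribe(self, handler: Callable[[OrderUpdated], None]) -> None:
        self._subscribers.append(handler)

    def publish(self, event: OrderUpdated) -> None:
        for handler in self._subscribers:
            handler(event)


class FulfillmentOrderCache:
    """Local copy of only the order fields the fulfillment service needs."""

    def __init__(self, bus: EventBus) -> None:
        self._orders: Dict[str, Dict[str, str]] = {}
        bus.subscribe(self._on_order_updated)

    def _on_order_updated(self, event: OrderUpdated) -> None:
        # Overwrite the cached copy; the order service stays the system of record.
        self._orders[event.order_id] = {
            "status": event.status,
            "shipping_address": event.shipping_address,
        }

    def get(self, order_id: str) -> Optional[Dict[str, str]]:
        return self._orders.get(order_id)


bus = EventBus()
cache = FulfillmentOrderCache(bus)
bus.publish(OrderUpdated("o-1", "paid", "221B Baker Street", 4200))
print(cache.get("o-1"))
```

The fulfillment service never writes order data itself; it only reacts to events, so the cached copy may lag slightly behind the order service.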

Joins:

- Joins are very easy to do in a monolithic system.

Joining data across distributed systems can be achieved in either of the following ways:

Joining data in client application:

Let’s consider a case where analytics service needs access to data of all customers and their orders

- Fetch all customer data from customer microservice interface

- Fetch all the order data from order microservice interface using customer data

- This approach works best for 1:N join
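A client-side 1:N join along these lines might look like the sketch below. `fetch_customers` and `fetch_orders` are hypothetical stand-ins for calls to the customer and order service interfaces, and the data they return is made up for illustration.

```python
from collections import defaultdict
from typing import Dict, List


def fetch_customers() -> List[dict]:
    """Stand-in for a call to the customer microservice interface."""
    return [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Linus"}]


def fetch_orders(customer_ids: List[int]) -> List[dict]:
    """Stand-in for a batched call to the order microservice interface."""
    orders = [
        {"id": 100, "customer_id": 1, "total": 25},
        {"id": 101, "customer_id": 1, "total": 40},
        {"id": 102, "customer_id": 3, "total": 10},
    ]
    wanted = set(customer_ids)
    return [o for o in orders if o["customer_id"] in wanted]


def customers_with_orders() -> List[dict]:
    """Join customers (1) to their orders (N) inside the client."""
    customers = fetch_customers()
    orders = fetch_orders([c["id"] for c in customers])

    # Group orders by customer so the join is a dictionary lookup per customer.
    by_customer: Dict[int, List[dict]] = defaultdict(list)
    for order in orders:
        by_customer[order["customer_id"]].append(order)

    return [{**c, "orders": by_customer.get(c["id"], [])} for c in customers]


for row in customers_with_orders():
    print(row["name"], [o["id"] for o in row["orders"]])
```

Fetching the orders in one batched call, rather than one call per customer, keeps the number of round trips constant.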

Materialized Views:

Let’s consider a case where analytics service needs access to all orders & products (an order can have multiple products & one product can be a part of multiple orders)

- Getting this data in real time will be too expensive so approach number 1 becomes unusable.

- We can have an order-product microservice which subscribes to updates from both the order microservice and the product microservice

- Maintain a local store of denormalized order ↔ product data within the order-product microservice, essentially maintaining a cache of joins

- This approach works well for frequently requested data with M:N joins
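As a rough sketch of such a cache of joins, the class below keeps both directions of the M:N relationship up to date as events arrive. `OrderProductView` and its handler names are hypothetical, and the in-memory dictionaries stand in for whatever local store the order-product service would actually use.

```python
from typing import Dict, List, Set


class OrderProductView:
    """Denormalized order <-> product view maintained from update events."""

    def __init__(self) -> None:
        self._product_names: Dict[str, str] = {}
        self._products_by_order: Dict[str, Set[str]] = {}
        self._orders_by_product: Dict[str, Set[str]] = {}

    def on_product_updated(self, product_id: str, name: str) -> None:
        self._product_names[product_id] = name

    def on_order_updated(self, order_id: str, product_ids: List[str]) -> None:
        # Drop links from the previous version of this order before re-adding.
        for old_id in self._products_by_order.get(order_id, set()):
            self._orders_by_product[old_id].discard(order_id)
        self._products_by_order[order_id] = set(product_ids)
        for product_id in product_ids:
            self._orders_by_product.setdefault(product_id, set()).add(order_id)

    def products_in_order(self, order_id: str) -> List[str]:
        ids = self._products_by_order.get(order_id, set())
        # Fall back to the raw id if the product event has not arrived yet.
        return sorted(self._product_names.get(p, p) for p in ids)

    def orders_containing(self, product_id: str) -> List[str]:
        return sorted(self._orders_by_product.get(product_id, set()))


view = OrderProductView()
view.on_product_updated("p1", "Keyboard")
view.on_product_updated("p2", "Mouse")
view.on_order_updated("o1", ["p1", "p2"])
view.on_order_updated("o2", ["p2"])
print(view.products_in_order("o1"))
print(view.orders_containing("p2"))
```

Reads hit only the local view, so the analytics service never has to call the order and product services at query time.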

Transactions:

- Transactions are much easier to do in a monolithic system

- Distributed transactions are much harder to do, especially if you want both ACID guarantees & serializability (consider the CAP theorem).

- Google has been able to get closest to achieving a perfect distributed transactions system (what Google did to get there is very hard & very expensive to do & is not possible for the masses). For reference, you can see Google’s white paper on this here.

- Below are the two most broadly used approaches to achieve whatever best version of distributed transactions that we can:

- Two Phase Commit (2PC): This protocol is a distributed algorithm that coordinates all the processes participating in a distributed transaction to decide whether to commit or abort the transaction.

- Two Phase Commit works in 2 steps:

- Prepare phase: The initiating service, called the global coordinator or TMC (transaction management coordinator), asks all relevant services to promise to commit the intended transaction. If any service is unable to prepare, the TMC sends the ABORT signal to the rest of the services in the next step; otherwise it sends the COMMIT message.

- Commit phase: If all participants respond to the TMC that they are prepared, then the TMC asks all the services to commit. After all services successfully commit, the TMC considers the transaction as successful. If any service fails to acknowledge that the transaction was successful, TMC will initiate additional messages to abort the transaction.

- Major disadvantages with 2PC protocol:

- Between the end of prepare phase and the end of commit phase, all participating services are in an uncertain state, because they are not sure whether the transaction that they have prepared for has to be committed or not.

- When a service is in uncertain state and loses connection with TMC, it may either wait for TMC to come back up, or consult other participating services in certain state for TMC’s decision.

- In the worst case, n uncertain services each broadcasting messages to the other n - 1 services will incur O(n²) messages.

- Another major disadvantage of 2PC is that it is a blocking protocol. After voting, a service blocks and holds locks on local resources while it waits for the TMC’s decision. Because of this blocking behavior and the worst-case message complexity, 2PC performs very poorly as the number of services involved in a transaction grows. More participating services also mean a higher chance of failure, since all these services are essentially communicating over HTTP.

- Because of these disadvantages, there are genuine & well-founded concerns about the scalability & performance of systems implementing 2PC. For this reason, Sagas are much more widely adopted than 2PC.
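The two phases can be sketched in a few lines. This is a deliberately simplified, in-process illustration: `Participant` and `two_phase_commit` are hypothetical names, and the sketch omits timeouts, durable logging and network failures, which are exactly where the blocking problems described above come from.

```python
from typing import List


class Participant:
    """Hypothetical service taking part in the transaction."""

    def __init__(self, name: str, can_prepare: bool = True) -> None:
        self.name = name
        self.can_prepare = can_prepare
        self.state = "idle"

    def prepare(self) -> bool:
        # A real participant would lock resources and persist its vote here.
        self.state = "prepared" if self.can_prepare else "aborted"
        return self.can_prepare

    def commit(self) -> None:
        self.state = "committed"

    def abort(self) -> None:
        self.state = "aborted"


def two_phase_commit(participants: List[Participant]) -> bool:
    """Coordinator (TMC) logic: commit only if every participant votes yes."""
    # Phase 1 (prepare): collect a vote from every participant.
    votes = [p.prepare() for p in participants]

    # Phase 2 (commit or abort): broadcast the decision to everyone.
    if all(votes):
        for p in participants:
            p.commit()
        return True
    for p in participants:
        p.abort()
    return False


happy = [Participant("order"), Participant("products")]
print(two_phase_commit(happy), [p.state for p in happy])

failing = [Participant("order"), Participant("products", can_prepare=False)]
print(two_phase_commit(failing), [p.state for p in failing])
```

Between `prepare()` returning and the decision arriving, a prepared participant holds its locks and cannot decide on its own, which is the uncertain state discussed above.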

- Saga Pattern (Eventual Data Consistency):

- Sagas were first presented by Hector Garcia-Molina & Kenneth Salem of the Department of Computer Science at Princeton University in 1987. Their research paper can be found here.

- As per Hector & Kenneth, “A Saga refers to a long lived transaction that can be broken into a collection of sub-transactions that can be interleaved in any way with other transactions. Each sub-transaction in this case is a real transaction in the sense that it preserves database consistency.”

- For example, in our business case above, a complete transaction is a Saga which could consist of four sub-transactions:

- Product Inventory Lock

- Order Creation

- Payment Against Order

- Order Confirmation

- How Saga Works: The transactions in a Saga are related to each other and should be executed as a (non-atomic) unit. Any partial execution of the Saga is undesirable and, if it occurs, must be compensated for. To amend partial executions, each Saga transaction Ti should be provided with a compensating transaction Ci.

- We define the following transactions and their corresponding compensations for our services according to the rule above:

- Product Inventory Lock:

- Lock product inventory (T1)

- Release product inventory lock (C1)

- Order Creation:

- Create a new order (T2)

- Mark order as canceled (C2)

- Payment Against Order:

- Charge Customer’s Card (T3)

- Refund Charge (C3)

- Order Confirmation:

- Mark order as confirmed & pending fulfillments (T4)

- Mark order as canceled & cancel pending fulfillments (C4)

- Once compensating transactions C1, C2, …, Cn-1 are defined for the sub-transactions T1, T2, …, Tn of a Saga, the system can guarantee that one of the following sequences will be executed:

- T1, T2, …, Tn (ideal & success case) i.e. Order was placed successfully

- T1, T2, …, Tj, Cj, …, C2, C1 (0 < j < n) i.e. Some error occurred while processing the order & all changes (if any) have been rolled back.
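The two sequences above can be illustrated with a small executor that runs each Ti in order and, on the first failure, runs the compensations of the completed steps in reverse. `run_saga` and the step functions are hypothetical, and the sketch assumes a failed sub-transaction leaves no effects of its own (it is atomic at the service level).

```python
from typing import Callable, List, Tuple

# (name, Ti, Ci): each sub-transaction is paired with its compensation.
Step = Tuple[str, Callable[[], None], Callable[[], None]]


def run_saga(steps: List[Step]) -> List[str]:
    """Execute T1..Tn; on failure run Cj..C1 and return the trace."""
    trace: List[str] = []
    completed: List[Tuple[str, Callable[[], None]]] = []
    for name, action, compensation in steps:
        try:
            action()
        except Exception:
            trace.append(f"{name} failed")
            # Backward recovery: undo completed steps, most recent first.
            for done_name, done_compensation in reversed(completed):
                done_compensation()
                trace.append(f"compensate {done_name}")
            return trace
        trace.append(name)
        completed.append((name, compensation))
    return trace


def ok() -> None:
    """Placeholder for a sub-transaction or compensation that succeeds."""


def declined() -> None:
    raise RuntimeError("card declined")


steps: List[Step] = [
    ("T1 lock inventory", ok, ok),
    ("T2 create order", ok, ok),
    ("T3 charge card", declined, ok),
    ("T4 confirm order", ok, ok),
]
for line in run_saga(steps):
    print(line)
```

With the card declined at T3, the trace shows T1 and T2 completing and then C2 and C1 running in that order; T4 is never attempted.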

- Saga Recovery:

- Two types of Saga recovery were described in the original paper:

- backward recovery compensates all completed transactions if one failed

- forward recovery retries failed transactions, assuming every sub-transaction will eventually succeed

- Ideally, there is no need for compensating transactions in forward recovery, assuming that sub-transactions will always succeed (eventually). This might also be the case if compensations are very hard or impossible as per your business use case.

- Ideally, compensating transactions never fail. In practice that is hard to guarantee because, in the distributed world, servers may go down, HTTP requests can fail, or some services might be unavailable for a brief period of time. Every business will deal with such a scenario differently, but it usually involves making compensating transactions idempotent & retrying them until they succeed, followed by some kind of human involvement if the maximum number of retries is exhausted.

- The compensating transaction undoes, from a semantic point of view, any of the actions performed by Ti, but does not necessarily return the database to the state that existed when the execution of Ti began. (For instance, when a new order was created in the database as a part of Ti, Ci doesn’t necessarily have to delete that record, it would just set is_canceled flag on the order record to True). Another such example of a semantically correct compensating transaction is that sending an email (Ti) can be compensated by sending another email explaining the problem(Ci).

- Saga Log:

- Saga has to guarantee that either all sub-transactions are committed or compensated for, but the Saga system itself might crash in between. Saga might crash on any one of the following stages:

- Saga had received a request, but no transaction was started yet. In this case, Saga can restart as though it had received a new request just then.

- Some sub-transactions were done. When restarted, Saga has to resume from the last completed transaction.

- A sub-transaction was started, but not completed yet. Since we are not sure whether the service completed or failed the transaction, Saga has to redo the sub-transaction on restart. That also means sub-transactions have to be idempotent; if they are not, backward recovery has to be triggered.

- A sub-transaction failed and its compensating transaction has not started yet. Saga has to resume from the compensating transaction on restart.

- A compensating transaction started but was not completed yet. Saga will have to re-attempt the compensating transaction. That means compensating transactions have to be idempotent too.

- All sub-transactions or compensating transactions were done, in which case nothing more needs to be done.

- For Saga to be able to recover from any of the states mentioned above, we have to keep track of the state of each sub-transaction, i.e. triggered, completed, aborted or compensated. This can be done by saving these events in a Saga log (a persistent data store).

- You can also think about Saga log as a state machine of atomic events.
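One way to picture recovery from the Saga log is a function that looks at the last logged event and decides what to do next. This is only a sketch under stated assumptions: `next_action` is a hypothetical helper, sub-transactions are numbered from 1, both sub-transactions and compensations are idempotent, and the start of a compensation is not logged (only its completion as `compensated`), so an interrupted compensation is simply run again.

```python
from typing import List, Tuple

# (sub-transaction number, state); states follow the list in the text:
# "triggered", "completed", "aborted", "compensated".
LogEntry = Tuple[int, str]


def next_action(log: List[LogEntry], total_steps: int) -> str:
    """Decide what a restarted Saga coordinator should do, given its log."""
    if not log:
        # Request received but nothing started: begin as if it were new.
        return "run T1"

    step, state = log[-1]
    if state == "triggered":
        # Outcome unknown, so redo the (idempotent) sub-transaction.
        return f"retry T{step}"
    if state == "completed":
        return "done" if step == total_steps else f"run T{step + 1}"
    if state in ("aborted", "compensated"):
        # Walk backwards through the previously completed sub-transactions.
        return "done" if step == 1 else f"run C{step - 1}"
    raise ValueError(f"unknown state in saga log: {state}")


log: List[LogEntry] = [
    (1, "triggered"), (1, "completed"),
    (2, "triggered"), (2, "completed"),
    (3, "triggered"), (3, "aborted"),
]
print(next_action(log, total_steps=4))
```

Here T3 was logged as aborted, so the restarted coordinator continues backward recovery with the compensation for T2.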

- Saga Restrictions:

- In a Saga, you can have at most 2 levels of nesting for your transactions, the top level Saga & your atomic, service level transactions.

- Each service level transaction should be an independent atomic action, i.e. its atomicity can’t depend on the atomicity guarantee of any other participating service.

- There is no atomicity guarantee at the top level i.e. one Saga may view the incomplete/incorrect results of other participating Sagas until the eventual consistency is obtained.
